-

Author / Posts

-

-

September 23, 2020 at 22:45 - Views: 697 #8766

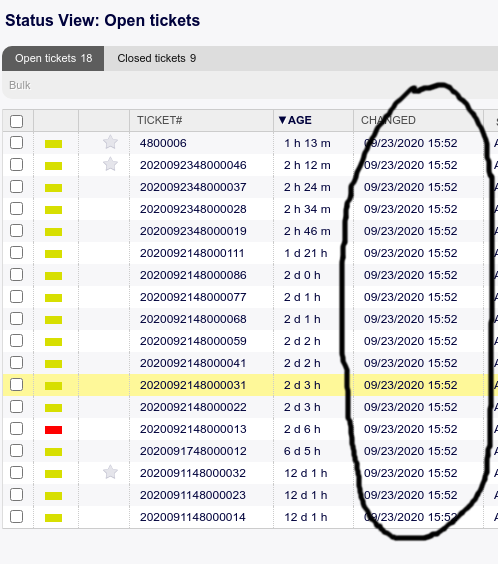

All tickets are being updated automatically.

Take a look at the image below:

It’s weird. What’s wrong?

-

September 24, 2020 at 9:05 #8771

Hi again,

There are many processes that can cause this. Without further information it is impossible to tell. If you select a ticket, you can have a look at the ticket history (usually in the miscellaneous menu). This should give you a hint about what is going on.

Best regards, Sven

-

September 25, 2020 at 22:44 #8785

I’m so sorry for the multiple posts. I’m so excited about this software, and I want to put it into production as soon as possible.

As for my problem, it’s very strange: no entry is recorded in the history screen for that time.

Agent Dashboard:

History:

Any hint?

-

September 26, 2020 at 7:22 #8786

Hi Squidy,

That’s not standard behavior. Do you have some "strange" generic agent jobs configured, for example?

How often is this value reset to a new one?

I think you need to check your system configuration again… :(

Best wishes,

Stefan

Team OTOBO

-

September 26, 2020 at 9:34 #8787

Actually, if there’s no entry in the history, I would guess that it is either a database problem (the values are read from there) or that some process is not correctly producing a history entry. Both would be unwanted.

Am I right that you are using Docker? Is this already 10.0.3, and did this behaviour start with this patch? The Docker version is still under active development, so I would not completely rule out regressions here.

From the two pictures you sent, I infer that this has happened more than once. If it is showing up at a regular rate, you could do the following to get more clues:

- Start a shell in the Docker database container: docker exec -it otobo_db_1 bash

- Open the database with either the root or the otobo user and password, e.g.: mysql -u otobo -p otobo (If you don’t know them, you can get the otobo password and database details from Kernel/Config.pm inside the otobo container.)

- Get all ticket parameters for one ticket from your second picture, e.g.: select * from ticket where tn=2020092402000022

- Log out, wait for it to happen again, repeat the query, and compare the results to see whether anything changed.

Hopefully this gives us more information. (Of course, while doing this, also check whether other processes have written anything to the history in the meantime.)
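The query-twice-and-compare steps above can be sketched in Python. This is only an illustration, not part of OTOBO: it assumes each query result was saved as "column: value" lines (the vertical layout the mysql client can print), and the helper names `parse_vertical_row` and `changed_columns` as well as the sample snapshots are made up for the example.

```python
def parse_vertical_row(text):
    """Parse 'column: value' lines into a dict of strings."""
    row = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the '*** 1. row ***' separator line.
        if not line or line.startswith("*"):
            continue
        # Split at the first colon only, so time values stay intact.
        column, _, value = line.partition(":")
        row[column.strip()] = value.strip()
    return row


def changed_columns(before, after):
    """Return {column: (old, new)} for every column whose value differs."""
    columns = sorted(set(before) | set(after))
    return {
        column: (before.get(column), after.get(column))
        for column in columns
        if before.get(column) != after.get(column)
    }


# Hypothetical snapshots of the same ticket row, taken a few minutes apart.
snapshot_1 = """
tn: 2020092402000022
change_time: 2020-09-27 21:28:03
"""
snapshot_2 = """
tn: 2020092402000022
change_time: 2020-09-27 21:32:03
"""

diff = changed_columns(parse_vertical_row(snapshot_1), parse_vertical_row(snapshot_2))
print(diff)
```

If only change_time shows up in the result, the row is being rewritten without any other column changing.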

Best regards, Sven

-

September 28, 2020 at 3:47 #8788

I’m not using Docker. I installed version 10.0.3 and migrated from OTRS 6. However, it was already happening with 10.0.2, without a migration.

I did what you suggested (queried the ticket table twice and compared the results). Only the change_time column changes, every 4 minutes.

    # diff ticket1.txt ticket2.txt
    25c25
    < change_time: 2020-09-27 21:28:03
    ---
    > change_time: 2020-09-27 21:32:03

Also, I’ve disabled all generic agent jobs, but the behaviour still occurs.
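As a quick check of the interval, the two change_time values from the diff output can be subtracted directly. This is a minimal sketch; the format string is an assumption based on how the timestamps are printed above.

```python
from datetime import datetime

# Assumed layout of the change_time values shown in the diff output.
TIME_FORMAT = "%Y-%m-%d %H:%M:%S"

first = datetime.strptime("2020-09-27 21:28:03", TIME_FORMAT)
second = datetime.strptime("2020-09-27 21:32:03", TIME_FORMAT)

# Difference between the two snapshots, in minutes.
interval_minutes = (second - first).total_seconds() / 60
print(interval_minutes)  # 4.0
```

A fixed interval like this points to a scheduled task rather than to user activity.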

-

September 28, 2020 at 10:31 #8789

Hey squidy,

OK, I’m now pretty sure that this is a bug, though a minor one. Could you please go to the SysConfig, disable Daemon::SchedulerCronTaskManager::Task###RebuildEscalationIndex, and see whether it continues? We included some extra functionality from another module there, and I guess a check is missing for whether anything in the ticket actually changed before "the changes" are written. This should not break anything, but it is erroneous behaviour nonetheless. We are a little busy at the moment, but if my guess is correct, I will try to find someone to look at it this week.

Best regards, Sven

-

September 28, 2020 at 14:18 #8796

Hi Sven.

I disabled Daemon::SchedulerCronTaskManager::Task###RebuildEscalationIndex and the issue no longer occurs.

-

September 29, 2020 at 15:01 #8800

Hi squidy,

Just to let you know, I opened an issue: https://github.com/RotherOSS/otobo/issues/490. If you are aware of any settings you made regarding the escalation index (I’m especially thinking of the escalation suspend functionality), please let us know. Otherwise, I’m confident that we will find it anyway.

Sven

-

September 30, 2020 at 16:25 #8801

Honestly, as far as I remember, I didn’t change anything related to suspending ticket escalation. :-(

-

October 9, 2020 at 12:39 #9106

Hey Squidy,

Just for your information: the error came from the escalation index rebuild, which was previously only used when the package was installed. Since you don’t use the escalation suspend functionality, disabling this task effectively solved the problem for you (though nothing much really happened anyway). As of 10.0.4 we check whether the rebuild is needed, so it should no longer occur even with the task enabled.

Best regards, Sven
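The idea of checking whether a write is needed before touching the ticket can be illustrated with a small Python sketch. This is not OTOBO's actual code (OTOBO is written in Perl, and the real fix is tracked in the issue linked above); the function `write_escalation_values`, the dict-based ticket, and the column names are hypothetical stand-ins.

```python
def write_escalation_values(ticket, new_values, now):
    """Store recomputed values only if they differ from the stored ones.

    Returns True if the ticket was modified, False if the write was skipped.
    """
    stored = {column: ticket.get(column) for column in new_values}
    if stored == new_values:
        # Nothing changed, so change_time is left untouched.
        return False
    ticket.update(new_values)
    ticket["change_time"] = now
    return True


# Hypothetical ticket row; the column names are assumptions for illustration.
ticket = {"escalation_time": 0, "change_time": "2020-09-27 21:28:03"}

# The periodic rebuild recomputes the same value: the write is skipped.
unchanged = write_escalation_values(ticket, {"escalation_time": 0}, "2020-09-27 21:32:03")
print(unchanged, ticket["change_time"])

# A genuinely different value is written and change_time is bumped.
changed = write_escalation_values(ticket, {"escalation_time": 1601242323}, "2020-09-27 21:36:03")
print(changed, ticket["change_time"])
```

Without the comparison, every scheduled run would bump change_time even though nothing else in the row changed, which matches the behaviour reported in this thread.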
